Those who are sceptical about the worth of opinion polls were confirmed in their distrust when at the last UK general election the result upset all the predictions, writes Michael Marsh, Emeritus Professor, Trinity College Dublin.

While the polls generally agreed the result would be close, with Labour shading it to be the largest party, the Conservatives romped home with an overall majority.

The average poll figure for the Tories was more than 4% out, and for Labour 2% out in the other direction, so the gap between them was close to 7% rather than being tiny.
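The arithmetic behind that gap can be spelled out in a short sketch. It uses only the round figures quoted above (roughly 4 points and 2 points); the function name is illustrative, and errors are taken as result minus poll average.

```python
def margin_error(error_a: float, error_b: float) -> float:
    """Error in party A's lead over party B, in percentage points.

    Each argument is that party's error, defined as result minus poll average.
    """
    return error_a - error_b


# Round figures from the text: the Conservatives came in about 4 points above
# their poll average, Labour about 2 points below theirs.
con_error = 4.0
lab_error = -2.0

lead_error = margin_error(con_error, lab_error)
print(f"Error in the Conservative lead: {lead_error:.0f} points")
```

Because the two errors run in opposite directions they add up rather than cancel, which is how a race the polls showed as roughly level can end with a lead of 6 points or more.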

In any country this could be a polling failure, but in the UK system of first-past-the-post elections it was a disaster.

This prompted a major inquiry, with an initial report on what went wrong released yesterday. What did go wrong, and how likely is it that the same thing might happen here?

Before looking at the reasons, it is worth putting this 2015 polling result in context.

It was certainly not the first time the voters had confounded the polls. In 1992 the expected Labour victory for Neil Kinnock turned out to be a triumph for the Conservatives under John Major.

Getting the numbers wrong is a problem; getting the wrong winner is a crisis.

More generally, UK polls almost always underestimate the Tory vote and overestimate the Labour one.

In the past 14 general elections – over 50 years – the Conservatives were underestimated 11 times, with one correct.

The party has been underestimated in every election since 1983, when polls were perhaps unnecessary to tell us Thatcher would beat Michael Foot.

Labour’s vote has been overestimated in six of the past seven elections.

These errors are typically in the 2-5% range, indicating that the average error in the Conservative-Labour margin is around 6%, which can translate into well over 100 seats in Westminster.

UK pollsters adjusted their methods after the 1992 election, following another inquiry.

Two changes were adopted by almost all UK companies: trying to ensure the sample is representative by adjusting it where necessary by people’s recall of their vote in the last election, and making some adjustment for those who were unlikely to vote.

There have also been changes in the ways polls have been conducted, with a shift to telephone and internet based polls and away from those carried out face-to-face, as well as a very dramatic increase in frequency, with almost 2,000 polls conducted 2010-15, and 200 during the short campaign itself.

If polls were right on average, there were more than enough to give an accurate result.
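A rough calculation shows why sheer numbers should have been enough, if the only problem were random sampling error. The sketch below assumes each poll is an independent simple random sample of 1,000 people and a party on 35%; both figures are illustrative assumptions, not numbers from the inquiry.

```python
import math


def standard_error(share: float, sample_size: int) -> float:
    """Sampling standard error of one poll's vote-share estimate, in points."""
    return 100 * math.sqrt(share * (1 - share) / sample_size)


def standard_error_of_average(share: float, sample_size: int, n_polls: int) -> float:
    """Standard error of the mean of n independent polls of equal size, in points."""
    return standard_error(share, sample_size) / math.sqrt(n_polls)


share = 0.35        # assumed vote share
sample_size = 1000  # assumed respondents per poll
n_polls = 200       # polls in the short campaign, as quoted in the text

single = standard_error(share, sample_size)
pooled = standard_error_of_average(share, sample_size, n_polls)
print(f"One poll: about {single:.1f} points; average of {n_polls}: about {pooled:.2f} points")
```

Averaging shrinks random error to a small fraction of a point, but it does nothing about an error that every poll shares. A bias of several points in the same direction survives no matter how many polls are pooled.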

Many reasons have been advanced for the failure, with some of the more popular ones being the ‘shy Tories’ unwilling to own up to their partisanship because of some supposed cultural bias against the Conservatives; ‘late-swing’ (a staple in the book of excuses) and patterns of turnout, with declared Labour voters more likely to stay at home than those declaring for the Conservatives; and ‘herding’, where pollsters adjust their estimates to ensure they are not too far from the average of other polls.

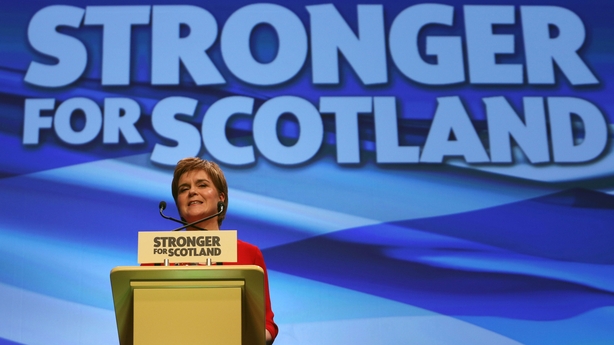

While allowing for a tiny late swing, perhaps prompted by fears of a Labour-SNP alliance in a hung parliament, the investigation discounted these as factors this time, on the basis that the evidence did not support them.

Instead they argued that the problem was that the samples collected by the pollsters were simply not representative. Of course, one might say: that is why they were wrong!

But the trick is to work out why they are unrepresentative and what can be done to improve that. Recommendations will be made in the final report in March but the team did offer pointers.

An important basis for the analysis was the set of polls carried out by the ‘gold standard’ method of probability sampling, where a sample is drawn of particular individuals (or households) and every effort is made to interview that person, calling back up to ten times if necessary.

This method is designed to ensure a sample that is representative of the population not simply in terms of known properties like age and gender (that can be checked against the census) but also unknown ones.

By contrast, the sampling methods employed in most polls manufacture a sample that is representative only in terms of known demographic characteristics.

The random probability method was employed for the British Election Study, an academic study well funded by the UK research council, as well as the British Social Attitudes survey, a similar exercise.

Both of these studies did find the ‘right’ proportion of Labour and Conservative voters, and differences between those samples and the commercial polling samples proved significant.

Significantly, it was found that Conservative voters were actually more difficult to contact.

Another difference is that the poll samples have too few of the oldest voters. They have enough people in the over-65 age bracket overall, but in the UK, where the average voter is 55, that bracket is very large. Within it, samples had too many people under 70 and too few over 75, and the latter group is more conservative.
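The age problem can be illustrated with a small sketch. All the numbers in it are hypothetical, chosen only to show the mechanism: a sample can hit its target for the over-65 bracket as a whole and still misstate support if the mix within the bracket is wrong.

```python
def weighted_share(group_mix: dict, group_support: dict) -> float:
    """Overall support in a bracket, given each sub-group's size and support."""
    return sum(group_mix[group] * group_support[group] for group in group_mix)


# Hypothetical Conservative support within two halves of the over-65 bracket.
support = {"65-74": 0.40, "75+": 0.50}

# Hypothetical make-up of the bracket in the population and in a poll sample.
population_mix = {"65-74": 0.5, "75+": 0.5}
sample_mix = {"65-74": 0.8, "75+": 0.2}

true_share = weighted_share(population_mix, support)
poll_share = weighted_share(sample_mix, support)
print(f"Population: {true_share:.0%}, poll sample: {poll_share:.0%}")
```

Weighting the bracket as a whole to its census size leaves this gap untouched, because the shortfall sits inside the bracket. Only a finer age split, or a sample that reaches the oldest voters in the first place, would remove it.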

A further difference is that respondents in poll samples were more interested in politics than the population as a whole, and again this is something which is tied to voting Conservative.

UK pollsters will have to address these sampling problems, not least for the Brexit referendum which could take place later this year. But what of Irish pollsters? Are they likely to encounter the same problems?

Political opinion polling has a much shorter history here, dating back really only to the 1980s, and there is nothing on the scale of some of the UK polling disasters (although referendum polling has had its problems).

Certainly we have seen differences between the average of final polls, or even all campaign polls, and the results.

In 2011 the simple average of the campaign polls was within 2% of each party’s final vote, overestimating Labour and Sinn Féin and underestimating Fianna Fáil. The final polls were 2% too low for FF and 2% too high for Fine Gael.

2007 was a similar story: FG and FF both underestimated, FG by 1%, FF by more like 3%.

Polls overestimated FF for many years. A particular case was 2002, when most pollsters changed the way they asked the vote intention question during the campaign, giving people a ‘mock ballot’ instead with the names of the actual candidates running, expecting that to give better results.

The polls still overestimated FF by several percentage points, although the last pre-campaign poll by MRBI (in which the FF estimate was adjusted downwards) and a phone poll by a UK company, ICM, did come very close to the final outcome.

In general, errors have been much smaller than those typical in the UK, and much less serious in terms of the gap between the biggest two parties.

That is not to say that the problems identified above, and those dismissed as issues in 2015, are not real ones for Irish pollsters.

Unlike in the UK, no internet polls are published here, and a lot of polling is still face-to-face, a method now rarely used for published political polls in the UK.

But it is no less tricky to ensure a truly representative sample. Not all those with a voting intention will actually go to the polling station, the undecideds will not necessarily vote (if they do vote) in predictable ways, and it may be that supporters of some parties are ‘shy’ about expressing their allegiance.

Irish pollsters do use different methods in dealing with undecided voters and allowing for turnout and this is reflected in the results: those allowing for turnout differences, for instance, typically give lower estimates of support for SF. The polls could be misleading us.
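How a turnout adjustment changes the headline figures can be seen in a minimal sketch. The parties, respondent counts and likelihood-to-vote scores below are all hypothetical, and real pollsters use their own, differing schemes.

```python
from collections import defaultdict


def vote_shares(respondents, use_turnout: bool) -> dict:
    """Party shares from (party, probability of voting) pairs.

    With use_turnout=False every respondent counts equally; with
    use_turnout=True each one counts in proportion to their stated
    likelihood of voting.
    """
    totals = defaultdict(float)
    for party, turnout_probability in respondents:
        totals[party] += turnout_probability if use_turnout else 1.0
    grand_total = sum(totals.values())
    return {party: total / grand_total for party, total in totals.items()}


# Hypothetical sample: supporters of party C say they are less likely to vote.
respondents = [("A", 0.9)] * 40 + [("B", 0.9)] * 40 + [("C", 0.5)] * 20

unadjusted = vote_shares(respondents, use_turnout=False)
adjusted = vote_shares(respondents, use_turnout=True)
print(f"Party C: {unadjusted['C']:.1%} unadjusted, {adjusted['C']:.1%} turnout-adjusted")
```

A party whose supporters are less certain to vote loses ground once the adjustment is applied, which is the pattern described above for SF. Whether the adjusted or the unadjusted figure is closer to the truth depends on who actually turns out.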

However, it is worth remembering that the Irish Times commissioned polls during the 1977 election and did not publish them because the polls indicated an unprecedented overall majority of votes for Jack Lynch’s FF and "everyone" knew the FG–Labour coalition would be returned!

By Michael Marsh, Emeritus Professor, Trinity College Dublin