A photo of Pope Francis wearing a puffer jacket, an image of Tom Cruise tripping up in a boutique, and a video of Ukrainian President Volodymyr Zelensky telling his troops to lay down their arms - all made the news in recent months, and all were utter fabrications, produced by generative AI.

Despite its current newsworthiness, AI is not, of course, brand new. In fact, if you have ever used sat nav or encountered an automatic phone service, you have already been assisted by artificial intelligence.

What has got the world talking in recent months, however, are developments in what is known as ‘generative AI’, powerful tools like the chatbot ChatGPT which, thanks to their ease of use, have allowed members of the public to test them out and fully understand their capabilities.

But while some manipulated text or images are used for fun, others, such as the Zelensky video referenced above, are clearly far more sinister in nature.

Developments in generative AI have allowed the creation of disinformation – false information which is created and spread with malicious intent – to become both more plausible and easier to share.

Now, as major world events such as the US election campaign approach, there are fears that disinformation generated by AI and shared on social media platforms could, quite simply, be used to change the course of history.

In this second report, I will be looking at the role of AI in generating ‘fake news’ and asking what can be done to protect consumers and how they can protect themselves.

"Right now, they're not more intelligent than us, as far as I can tell. But I think they soon may be."

This quote, which could have come straight from a science fiction film, is actually from Professor Geoffrey Hinton, known as the ‘Godfather of AI’.

Professor Hinton resigned from Google earlier this year and then gave a number of interviews about the technology he had worked on for many years.

These warnings, coupled with relatively free access to technology like ChatGPT, have ensured that AI has been one of the major talking points across the world in recent months, leading to deep levels of concern about what the technology can do now, and what it will be able to do in the future.

However, Professor Barry O'Sullivan of the School of Computer Science at UCC sounds a more optimistic note.

He says he does not subscribe to the theory that AI poses an existential threat to humanity.

In fact, Professor O’Sullivan, who is also a past President of the European AI Association, fears that what he describes as ‘dystopian’ science fiction scenarios could distract the public from engaging with the issues around artificial intelligence that need to be dealt with.

What is important, Prof O’Sullivan says, is that the systems being built are safe and reliable and do not amplify human biases.

He also stresses the importance of focusing on issues like inclusion and fairness, rather than whether the technology is going to go out of control in the future.

But while there is no doubt that AI has played a hugely positive role in areas including medical research, generative AI’s capacity for the creation and spread of disinformation remains a concern.

Disinformation itself is not new, but new technologies have transformed the speed at which false information can be produced, whether that is through text, audio or deepfake videos, and have also made it much more difficult to recognise.

'It's way more problematic'

The speed at which this technology is developing is very evident to Razan Ibraheem, a journalist who specialises in investigating disinformation and the use of verification techniques.

Among the areas she is particularly concerned about is the manipulation of political content, and she also says that AI-generated disinformation can be used to create tension in society by creating narratives that target groups such as immigrants, religious minorities, the LGBTQ community or other vulnerable cohorts.

"It’s not just a photo of a pope or a celebrity," she says. "It’s way more problematic."

Spotting artificially generated content is now becoming more complex, with elements that might once have been easy to recognise, such as tone of voice, accent or hand movement, now becoming far more difficult to detect in more sophisticated creations.

As with other types of disinformation, the power of material generated by AI to persuade also depends on who receives the information, and at whom it is targeted.

Mark Kelly is the founder of AI Ireland, a group which aims to increase public awareness of artificial intelligence.

He says that the manipulation of public opinion by generative AI is most likely to happen when the disinformation conforms to existing biases in the listener or reader.

RuPublicans

The most successful type of manipulated content is also material that goes just a little further than the reader might suspect.

RuPublicans is an Instagram account that takes prominent Republican politicians such as Mike Pence and uses AI to generate images of them as drag queens.

The site is clearly satirical, and labelled as such, and although the images are startlingly realistic, it is highly unlikely that anyone would believe they were real.

However, as Mark Kelly points out, disinformation is more likely to be believed – and spread – if the content goes just ‘a little north’ of what the reader might expect, but remains within the realms of plausibility.

In March, for example, a number of images of Donald Trump appeared online, allegedly showing him being arrested, or running from police.

The images were fake, but the fact that the former US President was, at that time, facing a court appearance lent the pictures an air of plausibility they wouldn’t have had previously.

So just what can be done to counter the effects of AI-generated disinformation?

Some of the answers to that question lie with artificial intelligence itself.

Tools such as Google Images are among those used by researchers and others when they are trying to decide if material is fake, while Meta is just one company that has spoken of its use of AI, describing it as a 'critical tool' to help protect people from harmful content.

As Mark Kelly explains, AI can be used to analyse large volumes of textual and visual content and identify potential instances of disinformation.

'Human in the loop'

Technology can also detect patterns and inconsistencies that may indicate false and misleading information, while AI-powered natural language processing techniques can be used to analyse content.

Mr Kelly says AI can also assist in detecting fake videos and highlighting manipulation or fabrication in areas such as facial expressions or lighting inconsistencies.

However, it is also vital, he stresses, that there is a "human in the loop" in these scenarios.

Individuals can also take action when it comes to information they distrust.

In the case of images or video, often it can be enough just to look for visual cues – inconsistencies in the length of an arm, for example, or a blurred line where a head joins a body.

Mr Kelly advises social media users to look for visual or audio inconsistencies that can indicate manipulation, such as unnatural movement or facial expressions.

If something ‘feels wrong’, he says, then it usually is.

Razan Ibraheem spends her days researching problematic content on social media, and the steps she takes while carrying out this work include reverse image searches, and the analysis of street signs and other background information.

She does admit, however, that this work is becoming more complicated as the images she encounters become more lifelike.

Ms Ibraheem also looks at the source, where the image is coming from, and takes note if it has originated from an account that has spread false information in the past.

In terms of people making their own checks, she advises all users of social media to keep a ‘critical eye’ on the information they receive and to be particularly vigilant about sharing images they are not sure about.

This applies even if you are sharing material because you have doubts about it, or to say you do not believe it, as to share is to amplify.

Ms Ibraheem also recommends that if you come across a statement from a politician, for example, that does not ring true, then it is worth going back to the source, maybe the politician’s own social media or party’s web page to see if it is being reported there, or on a traditional news website.

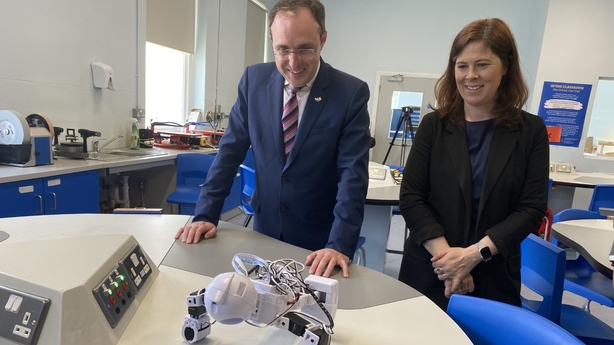

The need for individuals to keep a close eye on what they are reading, or sharing, is echoed by Dr Patricia Scanlon, who was appointed Ireland’s AI Ambassador in 2022.

Dr Scanlon has been working in this field for over 25 years and describes the recent release of ChatGPT as a ‘game changer’ which has allowed everyone to use the technology and see its potential.

We have, she says, reached a point where people are creating a lot of disinformation and the material is becoming more believable.

Dr Scanlon describes that incident as "a good lesson".

'Fake news'

We are reaching the point, she says, where people will get more cynical about everything they read and see on social media, and if this trajectory is followed then everything will be considered ‘fake news’ until you go back to a trusted source, and we will all be forced to use some form of verification.

Professor Barry O’Sullivan agrees that the issue for the individual goes back to the concept of media literacy and "understanding the provenance of things".

In fact, all of the experts I spoke to when writing this article mentioned the importance of traditional news sources in providing a service that consumers can trust.

Dr Scanlon also emphasised the importance of advising younger people, many of whom get most or all of their news from social media, to learn how to check their sources.

Of course, the tech companies themselves also have a crucial role to play in checking the information they allow to be shared on their sites.

TikTok, for example, says it uses a combination of technologies and moderation teams to identify, review and, where appropriate, remove content that violates its community guidelines.

Meta, meanwhile, says it uses a combination of consultation with outside experts, its own fact-checking programme and international technical capabilities to address misinformation on its platform.

Much of this action is voluntary, however, and although tech giants including Meta and Google have signed up to the EU’s voluntary code on disinformation, the EU said last month that Twitter had withdrawn.

As referenced by the EU's Commissioner for Internal Market, Thierry Breton, however, legislation is coming down the line to move such regulation out of the voluntary space.

All member states are required to have a designated ‘Digital Services Coordinator’ in place by 2024, and in Ireland this role is being filled by Coimisiún na Meán.

Also in the pipeline is the EU AI Act, described as the first ever law to deal with Artificial Intelligence.

Keep humans at the centre

According to Dr Scanlon, the AI Act seeks to keep humans at the centre of the issue and regulate to protect their safety.

She says that although this is EU legislation, the US is watching its development very carefully and that when in place it will have a global impact, as any company wanting to operate in the massive EU economy will have to comply.

Among the issues addressed by the AI Act will be transparency, and the need to let people know if they are interacting with a human or a bot, or if content they are accessing was created by AI.

Issues including the use of copyright material will also be addressed.

The role of legislation, and the regulation of tech companies will form part of another article in this series.

The EU Artificial Intelligence Act, which takes a ‘risk-based’ approach to regulating AI, is expected to come into force near the end of 2023, and providers of AI systems will be expected to comply within 24 or 36 months after that date.

This is of course a rapidly changing environment, and with technology improving by the day, some observers have questioned why it has taken so long for the legislation to be put in place.

Dr Scanlon says it is important that legislators be given time to complete their work, and says the fact that the work is ongoing means that emerging technologies can be included.

That the spread of disinformation is being accelerated by the growth in generative AI technologies is beyond doubt, and it is clear that even as legislation is being developed, the onus will remain on the individual consumer to be on their guard as to the information they are receiving and, crucially, sharing.

However, Professor Barry O’Sullivan believes that one positive to come out of the recent explosion of interest in the area is that people are now aware of the issue and discussing it.

People are afraid at the moment, he admits, because of the advances in generative AI and what it can do, and of course it can be misused.

The good news is that we are all talking about it; it is not going to creep up on us.

Knowledge, as ever, is power.